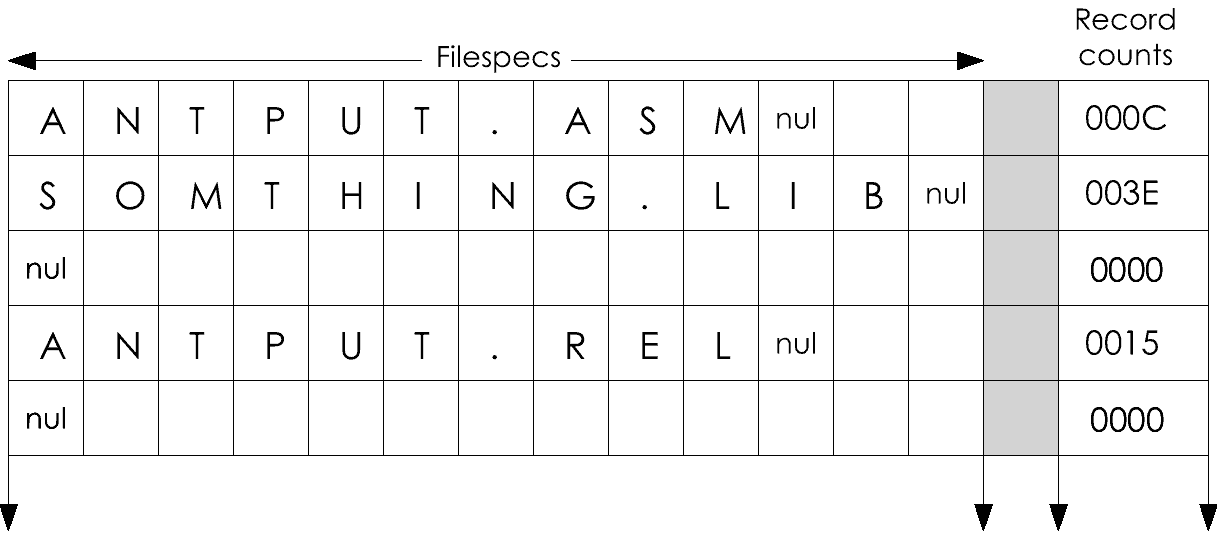

Figure 8-8. The layout of array FS in storage.

In this 1985 book, as in its predecessor (A Programmer's Notebook, 1983) I was trying to demonstrate a professional's thoughtful, systematic approach to software design for the benefit of the generation of enthusiastic amateur programmers that sprang up with the advent of small, cheap computers.

The book did not do well commercially, for a couple of reasons that are obvious in hindsight. First, my own choices: I used RMAC and its associated LINK and LIB tools. There was nothing wrong with these programs as tools, and the ability to build a library of relocatable object modules is absolutely essential to modular software design. However, the RMAC/LINK/LIB package was an extra-cost purchase from Digital Research, and most of the CP/M programmers who might have bought the book didn't have them and weren't interested in getting them.

I felt (and still feel) it was essential to exploit the Z80's instruction set, but once I'd chosen RMAC I had to use the Intel-like assembler mnemonics and syntax that it supported for the Z80 instructions. That put another barrier in the reader's way. However, relocating assemblers that supported Zilog's more appealing syntax were even less available and more costly than RMAC.

What really doomed the book, however, was that it was ridiculously late to market. By the time it reached print the IBM PC and MS-DOS had completely taken over the world of personal computing. Z80-based CP/M computers were still being sold, but hardly anyone cared. Had I written the same book but for the 8086 and IBM's assembler, the story might have been different.

Twenty-five years later, it seems there are still a few CP/M programmers in the world. For their sakes I am putting the book online. A few typos have been corrected and the figures have been redrawn and are somewhat clearer than the original. Otherwise it is the same. Look nearby for access a ZIP archive with the files distributed with the book.

This is a book of programs, and a book about programming. It contains a variety of software, all written in assembly language for a computer equipped with CP/M and a Z80 processor. The software is useful, but the book is about the process of constructing programs: how they are conceived, designed, and coded.

The book is in two parts. Part I contains complete programs, each worked out from an initial specification, through a design in a high-level pseudo-code, to working assembly language.

These programs are built from a kit of simpler parts: a comprehensive set of subroutines for manipulating integers, strings, and especially files. It brings to assembly language much of the convenience of the standard C runtime library, and it makes writing assembly language utilities a lot easier. Part II describes how that toolkit was designed and how it works.

The software in this book requires the CP/M-80 operating system (either CP/M 2.2 or CP/M Plus). It also requires a Zilog Z80 processor chip. I found that certain Z80 features were absolutely necessary to doing the file-handling modules right. While unavoidable, this is a pity; it means that such excellent dual-processor machines as the CompuPro and the Zenith Z-100, which use an Intel 8085 on their 8-bit side, cannot use these programs.

If you obtain the software in machine-readable form, you can execute the programs of Part I immediately. To modify them, however, you will need the RMAC assembler. To link the toolkit modules with programs of your own you must have the LINK-80 linker and the LIB library manager. RMAC, LINK-80, and LIB are products of Digital Research, Inc. (and trademarks as well). They are distributed free with CP/M Plus and are available separately for CP/M 2.2. There may be other assemblers and linkers that will work, but none have been tested.

Assembly language a bad reputation. Most books on programming tell you to use a simple language like BASIC, a clear one like Pascal, or an efficient one like C. I echo that advice: in many cases you should write programs in a higher-level language. This is especially true if you are new to programming. Until you are thoroughly at home with the fundamentals of programming -- until you know the loop, the subroutine, the array and the file in all their varieties, and have practiced breaking a problem down and composing a program from its pieces -- you should avoid assembly language. Once you have mastered those things, you are free to choose a language to suit the task at hand.

As compared to BASIC, Pascal, or C, assembly language is inherently more tedious and difficult to use. Worse, it is not portable; assembly-language programs can only run in the combination of processor and operating system for which they are written. However, assembly language code is normally much smaller and faster than the code generated by the simple compilers available for CP/M. And assembly language gives you access to all the features of the machine and operating system, including many that aren't available in higher languages. Sometimes speed and access to the hardware is crucial to the success of an application.

Commercial programmers avoid assembly language because its extra difficulty raises the cost of a project, while its lack of portability cuts down the size of the market. Still, I heard Mitch Kapor, president of Lotus Development, say that Lotus 1-2-3^ had to be written in assembly language because a prototype written in C was too big and slow to be saleable. And though Apple's MacIntosh owes some of the speed of its graphics to brilliant algorithms, its designers credit a well-honed assembly language toolbox for most of its speed.

Once you decide to use assembly language, your first concern should be to minimize the inevitable extra cost in effort. That's best done through a disciplined approach to design and coding, and by making full use of a library of subroutines. This book aims to demonstrate both those things.

I started out to do a simple update of an earlier book (A Programmer's Notebook: Utilities for CP/M-80, Reston, 1983; now out of print) to make it work under CP/M Plus. But the longer I looked, the less I liked the older book, especially its library of subroutines which was incomplete and had many inconsistencies.

For this book I began by designing and building a comprehensive toolkit of subroutines. I packaged them as functional modules and gave them macro interfaces that were as simple and as consistent as I could make them. (For example, all of the CP/M file operations, even to the testing and setting of file attributes, can be done with simple macro calls and no use whatever of the CP/M File Control Block.) Only then did I write the programs that would use the subroutines.

Half way through, I heard of a comparable package, called SYSLIB, that was in the public domain. I was careful to avoid seeing SYSLIB or any other subroutine package, so as to avoid even a hint of plagairism.

The work was finished in September 1984, just as the market for computer books in general, and books on CP/M in particular, collapsed like a pricked balloon. The publisher that had contracted for the book had, like much of the software industry, become fixated on the IBM Personal Computer. They decided the book wouldn't sell and gave it back to me.

Fortunately the present publisher realizes that there are more than a million CP/M machines in daily, productive use. The people who own them have been using computers longer than the typical PC owner, and since their abandonment by an industry they have become more knowledgeable and self-reliant. A lot of them, we hope, are getting ready to write their own software. Those are the people this book is for.

D. E. Cortesi

Palo Alto, 8/84 and 9/85

Programming is a craft like cabinetry or needlework. After all, a programmer, like any craftsperson, first draws a plan, then uses tools to shape materials in order to produce a useful object. This chapter is a brief review of the programmer's raw materials: the machine's instruction set and the assembler.

The review is necessary, but there can be no correct length for it. If you know little about assembly language, you'll find it too short and it will drive you out, fuming, to find one of the many good tutorials on the subject. If you know the subject well, the only interest will lie in whatever new light I can cast on old information.

If you're primed for disappointment, then, let's begin.

The heart of any computer is a Central Processing Unit (CPU). This chip has a limited repertoire of simple operations. It reads a stream of bytes from storage and decodes them as operations to perform. A program, when expressed as a sequence of bytes ready for execution by a CPU, is a machine-language program. The final goal of programming is to produce a machine-language program, but it's impractical to write programs in the binary numbers of true machine language.

A simple assembler makes it easier to write a program. An assembler is a program that translates symbols into machine language. It recognizes predefined symbols like "M" or "ADD" as standing for CPU operations or machine registers. It recognizes numbers written in decimal and hexadecimal digits and converts them to binary. It lets us define our own symbolic names for numbers and for locations in storage.

In Figure 1-1 you see a simple program. It's about as trivial as it can be; it writes one ASCII character, the control character BEL, to the console. I call it beep.

; BEEP -- toot the console

ProgramBase equ 0100h ; start of any program

BdosJump equ 0005h ; entry to the BDOS

BdosType equ 2 ; request to type 1 byte

AsciiBEL equ 7 ; the BEL byte

org ProgramBase

mvi c,BdosType

mvi e,AsciiBEL

call BdosJump

ret

end

Figure 1-1. A simple program written for an absolute assembler like asm.

I hope you have composed assembly programs as complicated as beep before now. If so, you know that what you see in Figure 1-1 is the source text of a program, which would be prepared with an editor program and stored as a file on disk. The file would, very likely, be named beep.asm.

The program could be assembled with the CP/M command

A>asm beep

The assembler, a program named asm, is called; it reads the source file and performs the translation from readable symbols to binary machine language. The translated program is called an object program. It is recorded as another file, beep.hex in this case.

That file is still not executable. In fact, although all the symbolic names have been stripped from it and replaced with their binary equivalents, beep.hex is still in a symbolic form. A hex file represents the machine-language program as a series of ASCII characters, one for each hex digit (group of four bits). This hex-file format was originally designed for storing object programs on paper tape, but it still has its uses.

CP/M includes a program that will convert the hex form of an object program to true, binary, machine language. It is called load in CP/M 2.2 and hexcom in CP/M Plus. The command

A>load beep

in CP/M 2.2, or

A>hexcom beep

in CP/M Plus will read the file beep.hex. The assembler's output will be converted to binary and written as a new file, beep.com. This file is an exact copy of the machine language program as it must appear in storage before the CPU can execute it. Once beep.com exists, the operator may give the command

A>beep

In response, CP/M will copy beep.com into storage and apply the CPU to it. The result will be a single "beep" from the terminal.

All assemblies go through these two translation steps. First the assembler converts symbols to binary values and records them in an intermediate file. Then a second program converts the intermediate file into an exact, binary image of the program. The operating system can load this image as a command, and run it.

In a simple assembly, the assembler is asm, the intermediate file is a hex file, and the second program is load (or hexcom). In the rest of this book, however, we'll use a relocating assembler, rmac, and a program linker, link, as the second program.

In order to construct a program of machine instructions, we have to know what instructions are available for use. A CPU's repertoire, the vocabulary of its machine language, is called its instruction set. These instructions, and the machine resources they can manipulate, are the raw materials of assembly language programming.

In this book we will use the instruction set of the Zilog Z80 CPU. However, the assembler we will use was originally designed to handle the simpler instruction set of the Intel 8080 CPU, and that causes some problems.

Intel's 8080 CPU was the first microprocessor to have an instruction set with enough power to be useful for general data processing. Its successor, the very similar 8085, is still a useful machine.

The 8080 offers us a very small set of hardware resources with which to work. The first one is an address space of 65,536 bytes of RAM, or "memory," or (as I prefer to call it) working storage. The CP/M operating system preempts two portions of this. It reserves the first 256 bytes, and its code occupies the highest addresses -- typically the top 8 or 12 kilobytes. The remaining addresses are available for our programs and their data.

A second resource is an 8-bit accumulator called register A. Except for loading and storing data, almost everything that the machine does, it does in register A. Only in register A, for instance, may we perform decimal arithmetic or manipulate the bits of a byte.

A third resource is a group of four 8-bit registers known as B, C, D, and E. These may be used to hold single bytes, or they may be paired as BC and DE to hold 16-bit words. In that guise they can be used as addresses for loading and storing a byte from register A. These registers may be incremented or decremented, but they can't receive the result of any other arithmetic operation.

A fourth resource is another pair of byte registers, H and L. Paired as the HL register, these can be used as a 16-bit accumulator. That is, the unsigned 16-bit word in HL may be added to itself, or the BC or DE word may be added into it. The contents of DE and HL may be swapped in a single instruction (but not the contents of BC and HL).

An important feature of the 8080 is that the storage byte whose address is in HL can be used as if it were another 8-bit register on a par with B, C, D or E. In the assemblers used here, that byte is referred to as register M.

A fifth resource is a pushdown stack of words in working storage. The CPU contains a 16-bit register, the stack pointer, which points to the last byte pushed onto the stack. Any of the register pairs may be pushed on the stack, which grows downward (from larger to smaller addresses). A useful oddity of the instruction set is that the word in HL may be swapped with the top word on the stack in one instruction.

The Intel company specified an assembly syntax for the 8080's instructions. Now, remember that there is no necessary relation between the binary bytes that the CPU reads on one hand, and the syntax rules of the assembler that prepares those bytes on the other. The assembly language is merely some engineer's idea of a concise, readable way to write down instructions.

The syntax Intel chose was simple. We might quarrel with some of the choices for operation code symbols -- the use of mov (move) where most other assemblers use "load" can be confusing, and if they had to use it, why couldn't they have spelt it out in full? -- but it is much too late to quibble.

The CP/M operating system was designed to support the 8080, and asm, the assembler that was part of it, naturally supported the Intel syntax for instructions.

Zilog designed the Z80 CPU as a faster, more powerful replacement for the 8080, and it was a quick success. In order to be a true replacement, the Z80 had to be compatible with the 8080; that is, it had to process the same instructions the same way, so that programs written for the 8080 could run on a Z80 without significant changes. Zilog succeeded in this. The machines differ in certain very subtle points about the way the flags are set after certain operations, but it's a rare 8080 program that won't run on a Z80.

The Z80 provides us with more resources. The most important ones are the IX and IY registers. These two 16-bit registers are used to address storage, just as the HL register can address a byte as "register" M. However, while we can reach only one byte through HL, we can reach any of 128 bytes on either side of the address in IX or IY.

The best use of an index register is to hold the base address of a block of related data. Then any byte of the block is just as accessible as any other, and any of them may be used as an operand in an arithmetic or logical instruction. If you persevere into Part II of this book, you'll find that the file-handling subroutines make extensive use of IX to access a block of information about the present state of some file.

The Z80 added yet more features to those of the 8080. It can test, set to 1, or set to 0 any single bit in any byte register. And while the 8080 can shift and rotate the bits of register A, the Z80 extends these operations to all registers. The Z80 can also subtract, as well as add, unsigned words into register HL. That makes it a lot easier to write subroutines for general binary arithmetic.

The repeating instruction was another Z80 innovation. It has six of them, each amounting to a short subroutine wrapped up in a single operation code. The ldir instruction is the best-known; it allows us to copy a block of storage from one place to another in a single operation. In the discussion of string operations in chapter 14 you will find an analysis of the use of Z80 operations like ldir versus the comparable 8080 loops.

The 8080 gives us a set of conditional jump instructions. The Z80 supplements these with a group of "relative" jumps. While an 8080 jump always specifies the full, 16-bit address of the place to jump to, a relative jump contains only an 8-bit number from -128 through +127. When the jump is taken, this offset is added to the present instruction address.

Relative jumps have three advantages. The first is that they are "position independent"; that is, a block of code that contains only relative jumps may be moved to a different place in storage and still work, whereas if an 8080 jump is moved, the address in it has to be modified. The programs in this book don't move around in storage, so position independence doesn't mean much to us.

The second advantage is that a relative jump is one byte shorter than an 8080 jump. That can be important in some rare applications, but if every jump in this book were relative, the programs wouldn't shrink by as much as ten percent.

The final advantage is an esoteric one. As the Z80 does it, an 8080-style jump always takes the same amount of time (ten clock cycles) whether or not the jump condition is satisfied. A relative jump, however, takes less time (seven cycles) if it is not taken, and more time (twelve cycles) if it is taken. So, in code that is fine-tuned for speed, we might write a relative jump whenever we expect the branch to be taken rarely, and an 8080-style jump when we expect it to be taken more often than not.

One relative jump, the djnz (decrement and jump if not zero), is especially useful. This instruction decrements the contents of register B and performs a jump if the result is nonzero. It is just the ticket for controlling small loops, and we'll use it a lot.

After designing a CPU that was so closely compatible with Intel's, the engineers at Zilog went on to specify an assembly syntax that was utterly different. It may have been that the engineers came from different backgrounds, with the Intel people following a DEC tradition while the Zilog folks worked to an IBM model. Whatever the reason, Zilog's official assembly syntax for the Z80 bears no resemblance to Intel's, even where the machine instructions are identical in binary value and operational effect.

Good things may be said of the Zilog assembly syntax. It is consistent; that is, similar operations have similar syntax. The Intel engineers, for example, seemed to think that there was something fundamentally different between loading register A from the byte addressed by HL, which they coded as

mov a,m

and loading it from the byte addressed by BC, represented as

ldax b

At Zilog, they saw these as the same fundamental operation and encoded them as

ld a,(hl)

ld a,(bc)

respectively. Either scheme works, since in any case we programmers soon learn the instructions by heart and think of them by their effects, not their encodings. But two incompatible traditions were established.

That created a problem for the companies that built computers and software based on the Z80. Those that already had an investment in 8080-style software often chose to ignore the Zilog syntax. Instead, they extended the Intel assembly format to cover the additional registers and operations of the Z80. One such company, now defunct, was Technical Design Labs; you will still sometimes see references to "TDL syntax" or to their "TDL assembler," an assembler that was distributed by several companies.

Companies that started out with the Z80 adopted the Zilog style from the start. Software for Tandy Corporation's TRS-80 line, for example, has always used Zilog syntax.

Digital Research Inc., the publisher of CP/M, chose neither course. It didn't supply a Z80 assembler in any form. Even today, the three 8-bit assemblers from Digital Research will accept only 8080 instructions. However, two of these assemblers, mac and rmac, are macro assemblers. We'll consider macro assembly in the next chapter; for now it is enough to say that one advantage of a macro assembler is that you may design your own instruction set. That's what Digital Research did; they supply a library of Z80 instruction macros as a standard part of the assembler package. You include that library in an assembly; then you may write Z80 instructions in extended-Intel syntax.

This is the form of Z80 instructions that you will find in this book: the form established by the Digital Research macro library. Except for the relative jumps, such instructions are rare in the programs of Part I. The 8080 instructions were adequate for almost everything done there. In the toolkit presented in Part II, however, the Z80 facilities are used a great deal.

If you are not as familiar with the Z80's instructions as you'd like to be, join me in an exercise. When I am confronted with a new CPU, I get a handle on it by cross-tabulating its instructions in different ways, looking for relationships between them. If you'll do that for the Z80 instructions I guarantee you'll uncover a few surprises.

In the first table, document all the possible ways of copying a single byte from a source to a destination. The possible sources and destinations include

Table 1-1 is a start at such a tabulation. Its columns represent sources and its rows, destinations. The single instructions that copy a byte have been filled it. Omitting the entries on the diagonal, how many of the missing squares can you fill in with sequences of instructions, and how short can you make the sequences?

If your patience has held up through that exercise, make a similar table for the instructions that will copy a 16-bit word between

Table 1-2 is a start at this 25-entry table. Note how few one-instruction entries there are, and how many are unique to the Z80. Can you find short sequences to fill in the empty entries? Spending a few hours constructing such tables is one of the best ways I know of to feel at home with the resources of a CPU.

There is one one other resource on which our programs may draw. The CP/M Basic Disk Operating System, or BDOS, is present in the machine along with our program. It can perform a number of services, and will if we invoke it correctly. The beep program of Figure 1-1 calls on the BDOS.

The BDOS is located at some address in high storage; that is, it begins at an address higher than the end of our program. We don't know what that address is. It varies from system to system, and it can vary from one command to the next in a single system, although it normally doesn't. However, there is always a jump instruction at location 5 (address 0005h) which is aimed at the lowest instruction in the BDOS. To call upon the BDOS, we need only place the correct arguments in registers and call location 5.

One BDOS argument is a service number that specifies what we want done. It is always passed in register C. The other argument depends on the service being requested, but if it's a byte, it is passed in register E, and if a word, in DE. In beep, the service is to type a byte at the system console. The second argument is the byte to be typed; it's passed in register E.

In Part I of this book, the programs interact with the BDOS only at second hand, through the subroutines of the toolkit. In Part II we will employ many BDOS services in designing the toolkit itself

We turn now to the tools of the craft of programming. The first is a mental tool: the process of abstraction, a supremely important ability in a programmer. First we create abstractions; then we implement them by exploiting the features of the assembler and by creating subroutines and macros.

At the level of machine language, there is only one kind of data, the binary byte. A byte or a set of bytes can be made to represent anything whatever. If we choose, a byte may stand for eight one-bit flags, or a small integer, or a character. A group of bytes can represent a sentence, or a program, or a floating-point number, or a screen image, or a table of addresses.

Such representations are abstractions. We abstract the concept of a number, a table, a screen image; then we impose that concept on the homogeneous bytes of machine storage. "This block of storage contains a message," we decide, "and this one contains a table of numbers." The hardware takes no notice of such decisions. We implement them by writing instructions that treat the first block as a message and the second as a table -- but not, we hope, vice versa.

We use abstract types in any program. Look at the beep program again (Figure 2-1). Even in this trivial exercise I can identify three abstractions. There is "the set of valid BDOS service request numbers." One of those is 2, the request to display one character on the screen. There is "the set of ASCII control characters." One of those is 7, the BEL character. Finally, there is the BDOS itself, the abstraction of a program that will perform a function given certain parameters. This last is not an abstract data type but rather a functional abstraction, the concept of a particular action.

; BEEP -- toot the console

ProgramBase equ 0100h ; start of any program

BdosJump equ 0005h ; entry to the BDOS

BdosType equ 2 ; request to type 1 byte

AsciiBEL equ 7 ; the BEL byte

org ProgramBase

mvi c,BdosType

mvi e,AsciiBEL

call BdosJump

ret

end

Figure 2-1. A simple program written for an absolute assembler like asm

Abstractions are purely human constructions. We may decide that a set of bytes is an English sentence, but that decision is in no way part of a machine-language program. The CPU will not object if we tell it to multiply two sentences, look for a verb in a floating-point number. The assembler will not complain if we give it a program like this.

msg: db 'Hello, there'

call msg

High-level languages help us avoid mistakes like that. They do it with a variety of mechanisms for isolating one data type from another. Assembly language doesn't; it permits us to mix data types in any way we like, including nonsensical ones. However, it also gives us the means to construct our own type-handling mechanisms. The first of these is the symbolic name. We use symbolic names to make clear the distinction between one kind of data and another.

We don't do it for the computer's benefit. Look again at Figure 2-1. When it is translated to machine language, it is compressed by a factor of 40, from more than 360 bytes to just eight. This compression is the result of stripping out every bit of symbolic information. Names of operations translate to one byte; symbolic constants translate to one or two; and all comments are deleted.

Ninety percent of the beep source file is redundant -- to the CPU. But these things are not in the source file to serve the CPU; they are there to serve human purposes. In Figure 2-2 you can see a version of beep that contains the absolute minimum of symbolic information. In translation it compresses by a factor of only five. Since it is eight times less redundant, it is in some sense a more efficient representation of a computer program. However, it is a total failure as a message between humans.

org 256

mvi c,2

mvi e,7

call 5

ret

Figure 2-2. The beep program stripped of symbols.

Every program source file is a message with three destinations. One destination is the processor, which has no ability to process symbolic information. The second is yourself; you record your decisions about the abstractions being used in the program as comments and symbolic names, for quick reference as you work. The third is a future person, either the programmer that will follow you or yourself at a later time.

Since two of the three recipients of a program are human, and since the other is mindlessly unaware of the program's symbolic content, it follows that the symbolic components of the source text deserve as much attention as the purely mechanical elements.

It also follows that the best assembler is the one with the best support for symbolic information, and that we should exploit that support as thoroughly as we exploit the machine. The CP/M assemblers all allow symbolic names to be as much as 16 characters long and can handle hundreds of names in a single assembly. I've made good use of those features in these programs.

Some things and locations are such constant features of CP/M programs that we might as well have standard names for them, and we might as well find a way to avoid having to type them into every program. The assembler's maclib command gives a way to include a file of equated names.

All the programs in this book use a single macro library, environ.lib. It contains, among other things, about 50 names for common things, notably the standard CP/M storage locations and the ASCII control characters. A separate macro library, services.lib, contains names for the BDOS service requests and a service macro that encapsulates the calling mechanism. If we like, we can rewrite Figure 2-1 using these two libraries and standard names (Figure 2-3).

; BEEP -- toot the console

maclib environ

maclib services

prolog

service BdosType,AsciiBEL

ret

end

Figure 2-3. Program beep written using the toolkit macro libraries.

Neither the CPU nor the assembler have any support for abstract data types, but we programmers use them constantly. Four types are so common and so useful that they crop up in every program. This book features a toolkit of subroutines that deal with these four data types: the character, the integer, the string, and the file.

We use use the ASCII code to relate numbers (which is all that a byte can contain) to letters. Once we've decided that a certain byte will contain a character, we tend to talk as if we really could store a letter in it. We can't, of course; even when we write a sequence like this

mvi a,'x'

sta LetterByte

what we have accomplished is to store the number 120 in the byte -- a quantity that has no necessary relationship to the letter x whatsoever. That's the virtue of abstractions. Having decided on the treatment of LetterByte and having accepted the ASCII code, we can write a program in terms of these abstractions. It relieves our mental burdens considerably, as anyone will attest who has entered a program, in binary, to a primitive development system through its toggle switches.

Sometimes we have to switch between one abstraction and another on the fly. For instance, if we want to make a letter uppercase, we have to alternate between thinking of it as a letter, as a number, and as a bit-pattern. In Figure 2-4 you see a subroutine that returns the letter addressed by register HL as uppercase. There are two point-of-view switches in it.

uppit: ; returns A = m(hl) as uppercase

mov a,m

cpi 'a' ; lowercase?

rc ; (no, less than 'a')

cpi 'z'+1

rnc ; (no, >= 'z')

ani 1101$1111b ; yes, make uppercase

ret

Figure 2-4. A subroutine to make an ASCII letter uppercase.

The first five instructions do a range-check on the letter and exit if it isn't a lowercase letter. The second and third compare the letter to the constant a and return if it's less. The alphabet, as we usually think of it, doesn't recognize the concept of "less"; even so, up to this point most people would agree that the program is dealing with alphabetic letters.

In the fourth instruction we shift to another view of the letter. Here we want to test the letter against z but, in order to set the Carry flag for all cases when the letter is less than or equal to z, we has to test against a value greater than z. The expression 'z'+1 only makes sense if we think of z as a number, not a letter.

The subroutine finally makes the letter uppercase by setting its bit 5 to zero. That operation only makes sense if we think of the letter as a pattern of bits. After long experience with assembly language, I find such shifts in point of view very natural, but they do give newcomers a lot of trouble.

It is a mental strain to work with materials whose shape depends on our angle of view, and every increment of mental stress adds to the time-cost of making a program. But the strain is only present while we are designing and coding the operation. If we code it once in a subroutine like Figure 2-4, we may then forget all its internal details. The symbolic name of the subroutine becomes the abstract function "make an uppercase letter." Thereafter we need only write

lxi h,LetterByte ; point to letter

call uppit ; get it as uppercase

without regard to the details of the process. Naive writers on programming will tell you to use subroutines because they make the program smaller (the instructions don't have to be repeated at each place they are used). That's true. However, the true economy of a subroutine is that it reduces the mental load on the programmer. From that point of view, it can be economical to create a subroutine that is used only once.

The toolkit used in this book contains a number of functions that, like Figure 2-4, modify or classify single characters.

A lone character has limited uses. Often we need strings of them. A string can be a message, or part of one; it can represent one line of a file; and tables of strings are helpful for decoding complicated user input. Most high-level languages recognize the string as a fundamental data type. Assembly language does not; it supports only independent bytes. However, it gives us the tools we need to define our own string data type. We only have to decide how a string should be represented in storage.

There are two common ways of representing strings. In both, a string is a sequence of letters in adjacent bytes. In one method, the string is preceded by a count of the bytes in the string. For instance, a 6-byte string that could be as long as 10 bytes would be defined this way.

str: db 6 ; current length

db 'abcdef' ; contents

ds 4 ; unused space

This representation of a string is used by several Pascal compilers, but hardly ever in assembly language. You can spot the languages that use it; they restrict strings to a maximum length of 255 bytes because they have to fit the count into the leading byte. This string form has the advantages that it is easy to discover the string's length (just pick up the count) and it's easy to find the end of the string (add the string's length to its address). But nothing else you might do with a string is easy because you are forever patching up the length-bytes.

The other method leaves the length of the string implicit, marking its end with a special delimiter that can't otherwise appear in a string. A variety of delimiters have been used. The CP/M BDOS marks the end of a string with the ASCII currency symbol in some of its functions. Some programmers, when space is at a premium, will mark the end of a string by setting on the high bit of the last character. But the most common delimiter is the null byte, a byte with a numeric value of zero.

The same constant string (six bytes in a 10-byte string space) would be defined this way, using a null delimiter.

str: db 'abcdef'; contents

db 0 ; delimiter

ds 4 ; unused space

With this convention, it takes time to find the end of a string or to learn its length (you have to inspect each byte in turn until you find the null byte). But it is easy to copy a string (just copy bytes until you have copied the null) and to append one string to another (find the null in the first, then copy the second through the null that ends it).

It is particularly easy to compare two strings in this format. A subroutine that does it appears in Figure 2-5. One nice feature of this string format is that when one string is a leading substring of the other, the shorter one will automatically appear as "less."

; STRCMP: compare the strings addressed by DE and HL.

; Return the CPU flags set as for first minus second, and

; return the pointers updated to the first inequality.

strcmp: ldax d ; first string's byte

cmp m ; ..versus second string

rnz ; (unequal)

ora a ; equal ending nulls?

rz ; (yes, return Z true)

inx d

inx h ; try next bytes

jmp strcmp

Figure 2-5. A subroutine to compare two strings

Null-terminated strings have the nice property that every trailing substring of a string is also a string. The string abcd0 (using 0 to stand for the terminating null) is a string, but so are bcd0, cd0, and d0. So indeed is the terminal null byte; it's the null string. This property means that, for example, if we are pointing to a string that has leading blanks, we can increment the pointer past the blanks and still be pointing to a string. It might now be the null string, but otherwise it needs no special handling.

This string type is a very useful abstraction. The subroutines in this book include a comprehensive set of tools for creating and manipulating strings in assembly language.

Only two numeric data types are native to the Z80 CPU: the unsigned 8-bit integer and the unsigned 16-bit integer -- in other words, the binary byte and the binary word. The only arithmetic it can do on them is to to add or subtract them, or increment and decrement them by one. A lot can be done with only these abilities, but sometimes we need more.

Sometimes we need other kinds of numeric data. One useful type is the signed integer, a number that can record negative as well as positive values. Occasionally we need the operations of multiplication and division. Multiplication and division of unsigned values can be done with subroutines, and all four arithmetic operations on signed values require subroutines. Subroutines to multiply and divide unsigned integers, and to add, subtract, compare, multiply, and divide signed integers, are part of the toolkit developed in this book.

When two 16-bit integers are multiplied together, the result may be as much as 32 bits long. An integer of this size is an awkward thing for the Z80 to handle. It takes more than half of the CPU's registers just to hold it! Nevertheless, the data type of the 32-bit (4-byte) integer turns out to be useful, especially in file operations.

Under CP/M 2.2, a disk file may be as long as eight megabytes: 8,388,608 bytes, to be exact. Under CP/M Plus, it may be four times as large, or 33 million bytes. If we want to note our position in a file exactly to the byte -- and we do -- we must be able to note a number that may occupy 25 bits. If we want to convert a byte-address within a file to a record count, we have to be able to divide such a number by an integer.

Accordingly, our toolkit must contain at least some support for working with 32-bit unsigned integers, or "longwords" as we will call them. The toolkit routines for 16-bit multiplication return longwords; there is a routine to divide a longword by a word; and there are routines to add, subtract, and compare longwords.

These numeric types -- bytes, signed and unsigned words, and longwords -- are all binary numbers. We must have a means to convert between the binary representation of a number and the ASCII digits that we can display, print, or type on a keyboard. This is where the numeric data types and the string data type come together. The toolkit contains a set of functions to convert strings of ASCII digits into binary values, and vice versa. If we have a string of digits (either decimal or hexadecimal), we can convert those characters into the binary number they represent. If we have developed a binary number, we can convert it to digit characters and append them to a string.

The file is another abstract data type. The bulk CP/M itself is concerned with supporting this abstraction. To a CP/M program, a file is a sequence of bytes that it can create, write, read, rename or erase by calling on the BDOS with appropriate arguments.

This a great convenience. The operating system's file services shield us from a world of messy details -- we needn't worry about disk space allocation, or sector and track numbers, or sector sizes, or I/O interfaces. All we have to do is point to a CP/M file control block and say, "do it, please," and it will be done. Furthermore, the same "do it" request will work correctly in another system with different disk hardware, and under other versions of the operating system such as MP/M.

Useful though it is, the CP/M file system has some shortcomings. Although it claims to present a file as a sequence of bytes, it really stores it as a sequence of 128-byte records. There are too many places where we have shift points of view, thinking of a file at one time as a sequence of bytes and at another as a sequence of 128-byte blocks.

Another problem is the lack of device independence. Under CP/M, there is one set of service requests for dealing with files and a completely different set for dealing with the console, printer, and auxiliary devices. That's too bad, because with few exceptions these character devices could be treated just like files, and there are many advantages to doing so.

Another failing is that there is no connection between the file abstraction and the other abstract data types, especially integers and strings. We'd like to be able to read or write data in units that are strings, or words, or longwords, but the only data unit that CP/M can transfer is the 128-byte record.

There is also the problem of filespecs, the command tokens that the user enters to name a file. The user names a file as "d:filename.typ," but the program must parse that string into the file control block before CP/M can use it. Not only is this a tedious bit of processing, but the program must also check the string for validity (CP/M won't), and must check to see if it is ambiguous.

A related problem is the sticky one of file passwords. CP/M Plus supports them while CP/M 2.2 does not. Under CP/M Plus, a complete filespec is "d:filename.typ;password." The program must recognize the password and take special steps to present it to CP/M when the file is opened, or to assign it when a file is created. Furthermore, a good program should be able to tell when a file password is needed and prompt the user to enter it -- but only if it was omitted. All these filespec problems make it a major operation to get a file opened for processing.

All of these problems can be solved, and their solution is a major feature of the toolkit presented in this book. It contains a set of functional abstractions for dealing with files, abstractions that make of a CP/M file a true abstract data type that interacts smoothly with the other abstract types. The design details are complicated, and we'll defer them to the second part of the book. In the first part we will concentrate on using files.

In order to use an abstract file, you have to use assembler macros. But macros and relocation are separate topics. Let's turn to them now. We will return to files in the the next chapter.

Every assembler gives us two tools for abstraction, symbolic names and subroutines. A macro assembler gives us a third: the macro. In essence, a macro is a packet of assembler statements that we can invoke with a single line. We define the macro just once. Wherever we want that packet of lines inserted in a program, we write only the name of the macro. The assembler replaces it with the lines we defined for it.

Let's demonstrate this with an example. Recall Figure 2-4, the subroutine named uppit. Suppose that, for some reason, we expected to have to make the byte Letter uppercase at several places in a program. Furthermore, uppit doesn't affect the byte in storage; it returns the altered byte in register A. Therefore at several places in the program we are going to have to write this sequence of lines

lxi h,Letter

call uppit

mov m,a

Besides, at some of those places, we won't want the previous contents of registers A and HL destroyed when this is done.

Very well, we will write a macro and name it letterup (Figure 2-6). That done, we can write the single line letterup wherever we intend to make the value of Letter uppercase. The assembler will replace the one line with the seven lines defined for the macro. This is called "macro expansion"; the assembler "expands" our one-line macro call by replacing it with the body of the macro definition.

letterup macro ; open macro definition

push psw ; save A

push h ; save HL

lxi h,Letter; point at byte

call uppit ; get as upcase

mov m,a ; put back

pop h ; restore HL

pop psw ; restore A

endm ; close macro defn.

Figure 2-6. A macro to make the byte Letter uppercase.

There are several ways to think about what we have accomplished with Figure 2-6. The simplest is to say that we have used the assembler to save ourselves time at the keyboard, and we have.

Another way is to say that we have used the assembler to create a new machine instruction, the letterup instruction. It's a special-purpose instruction which happens to be composite, comprising seven ordinary instructions.

The most general way to think about it is to say that we have used the macro feature to create a new abstract function. As a result of our coding the macro, the name, letterup, has come to stand for the function, make-the-byte-Letter-be-uppercase. Wherever in a program we need that function, we need only code the name.

Notice that the letterup abstraction has been built upon another abstraction, the one implemented by the subroutine uppit. The macro provides the function of the subroutine plus saving and restoring registers. We will often build one abstraction on another until we have produced a whole heirarchy of them.

If that were all there was to macros, they would still be useful, but there is more. A macro can be written to take parameters (or "arguments" or "operands" -- the words should have different meanings, but they've been so abused that they mean almost the same thing).

A macro parameter is a portion of the macro that is to be supplied at the time the macro is processed. When we define the macro, we refer to the parameter by a symbolic name. When we use the macro, we supply data to be substituted for the parameter-name wherever it appears in the macro expansion.

Parameters let us make a macro general rather than specific. We can demonstrate this by rewriting Figure 2-6. A macro that operates only on a byte name Letter is specific to that label. We should define instead the macro upany (Figure 2-7). This macro takes as its only parameter the address of the byte to be made uppercase.

upany macro which ; parameter-name is "which"

push psw ; save A

push h ; save HL

lxi h,which ; point at byte "which"

call uppit ; get as upcase

mov m,a ; put back

pop h ; restore HL

pop psw ; restore A

endm ; close macro defn.

Figure 2-7. A parameterized macro to make any byte uppercase.

Compare Figure 2-6 and Figure 2-7. They differ in only two lines. In Figure 2-7, the first line notifies the assembler that this macro takes a single parameter whose name is "which." In the fourth line there is a reference to which; when the assembler expands the macro it will replace the characters "which" with whatever characters the programmer wrote following the name of the macro. At one point in the program we might write

upany Letter

with the result that the assembler will produce the line

lxi h,Letter

At another point we might code

upany String+17

and the assembler will dutifully produce

lxi h,String+17

as one of the lines of the macro expansion. In this way a macro can become a general functional abstraction.

The Digital Research assemblers support conditional assembly, a means of controlling the assembler so that different code is produced depending on the contents of the source program. This finds its greatest use within macros. One common use is in checking the parameters of macros. As shown in Figure 2-7, the upany macro doesn't check its parameter in any way. If we thoughtlessly omit the parameter,

upany

the assembler will replace the parameter name "which" with the null string, producing the line

lxi h,

Then it will discover that it can't assemble such an instruction and flag that line as an error. But the cause of the error won't be obvious. We can trap the error and issue a diagnostic message if we rewrite the macro as shown in Figure 2-8.

upany macro which ; parameter-name is "which"

if not nul which

push psw ; save A

push h ; save HL

lxi h,which ; point at byte "which"

call uppit ; get as upcase

mov m,a ; put back

pop h ; restore HL

pop psw ; restore A

else ; "which" was null

+++ Address of byte is required

endif

endm ; close macro defn.

Figure 2-8. A parameterized macro with a check for an omitted parameter.

The assembler will expand all of the lines of the macro, replacing every occurrence of which with the given parameter, just as before. After expanding all eleven lines, it will process them just as if they had been written into the program at that point.

Now, however, the lines comprise a conditional assembly -- one bounded by if, else, and endif lines. The assembler will process the if line, which instructs it to test a condition. The condition in this case is "not nul" followed by whatever parameter was given. That condition is satisfied if any nonblank characters follow the word nul. If a parameter was given, the condition is true, and the assembler continues working up to the point at which it finds the else line; then it skips lines until it finds endif. The effect of the macro expansion will be exactly the same as if we had used the macro of Figure 2-7.

If we omit the parameter, however, something different will happen. The assembler will still expand all eleven lines of the macro body and begin to process them. But the condition of the if line will not be satisfied. After expansion, it will consist of only

if not nul

because no parameter was given and so which was replaced with the null string.

Since the condition is not satisfied, the assembler will begin skipping; it will not assemble anything until it has seen the else line. When it begins assembling again, it will find the line

+++ Address of byte is required

That is not a valid assembly statement. It's a deliberate error. The assembler will display it with an error flag. The line doesn't say anything to the assembler or the CPU, but it gives the programmer a better handle on the source of the problem.

The conditional assembly feature can be used within macros to make them more useful or more flexible. To see how, let's rewrite the upany macro once more.

As written in Figure 2-8, the macro abstracts the idea of "make a named byte in storage uppercase." That might be handy, but there are times when we want to make a byte uppercase when we can't refer to it by a symbolic expression. Perhaps we have computed its address by adding together a base address and some other factor. Now its address is in register HL, but we can't give a symbolic name for it, and therefore can't use the macro. We could just write the subroutine call instead:

push psw ; save A

call uppit

mov m,a

pop psw

Besides being tedious, that might confuse a later reader who would waste time figuring out why we didn't use the macro here.

We'd like the macro to handle this case. We need a way to tell it that the address has already been loaded into HL and therefore it needn't generate the instructions to save and load HL. The only way to do that (with the Digital Research assemblers; others have better methods) is to define a special symbolic name, and to test for that name in the macro. Suppose that, early in the program, we had defined the name +H to the assembler:

+H equ 0FFFFh

If we can assume that's been done, we can rewrite the macro as shown in Figure 2-9.

upany macro which ; parameter-name is "which"

if not nul which

push psw ; save A

if which ne +H

push h ; save HL

lxi h,which ; point at byte "which"

endif

call uppit ; get as upcase

mov m,a ; put back

if which ne +H

pop h ; restore HL

endif

pop psw ; restore A

else ; "which" was null

+++ Address or +H required

endif

endm ; close macro defn.

Figure 2-9. A macro that uses conditional assembly.

If we give this macro a parameter that is any expression other than +H, it will produce exactly the same lines as the one in Figure 2-8. But if we invoke it with the line

upany +H

the assembler will find the conditions of the two inner if statements to be false. As a result, it will skip assembling the lines that save, load, and restore register HL. The special parameter +H serves to tell the macro, "don't bother saving and loading the address, I've done it for you."

(This technique works with the assembler named mac, but it does not work as shown with rmac. A more involved method, described in Part II, is really required.)

All of these macro techniques, and some that haven't been mentioned, have been put to use in building the tools in this book. To begin with, every function in the toolkit is accessed by way of a macro. These macros validate their parameters as fully as they can, and produce specific messages when necessary parameters are omitted. Most of them use the technique of special names for registers.

The contents of the macros are explained at length in Part II of the book. However, you don't have to know their contents in order to use them as we will be doing here in Part I; you only need to know how they are employed.

The toolkit macros are defined in a single macro library named environ.lib. It is made part of an assembly with the single statement

maclib environ

The assembler primes itself with the toolkit macros before continuing with the assembly. The environ maclib contains

The macros, their functions, and their parameters are listed in an appendix and are summarized for quick reference on the end-papers of the volume.

Simple assemblers like asm provide symbolic names and conditional assembly. Macro assemblers like mac add the ability to define and use macros. There is a third level of capability and it, too, enhances our ability to create abstractions. This is the ability to construct independent modules, and to create a final program by linking an assortment of modules together. An assembler that supports this is commonly called a relocating assembler -- although relocation is the least important part of it.

To a simple assembler, a program is a single source file and it is assembled in a single run of the assembler. There is no relation between the code produced in one assembly and that produced in another. As a result, the output of an assembly has to be a complete, self-contained program. Every function required by that program has to be part of its source text, because there is no way (or at least, no easy way) to combine the output of two different assemblies into a single program.

A relocating assembler does not force this assumption on us. The input to a relocating assembler does not have to define a self-contained program. It may be the source of a module: a portion of a program that is logically separable from other parts, but not complete in itself. The assembler provides three features that, together, make it possible to link together the output of many assembly runs into a single program.

The first two features have to do with symbolic names. We may tell the assembler that a particular name is external, that is, not defined by any statement in the current source file. The assembler takes it on faith that the given name will be defined somewhere, sometime, in another assembly. It includes in its output a note to the effect that name so-and-so is required to complete this module. It also writes notes to designate all the places in the object code where the address of name so-and-so is used in an instruction.

The complement to an external name is a public name. A name that is defined within the current source file may be be designated as public. This tells the assembler that access to the name will be required by other modules. The assembler does nothing special with the name except to place in its output a note to the effect that name such-and-such is defined at a certain offset in this module.

These special name-handling features are almost enough to allow modularity. Already you can imagine a program that would read the object code of two or more modules and splice together their name-references. It could see what names were defined as public, and what names were required as external references; then it could supply to the external references the addresses that correspond to their definitions.

Let's illustrate this with a simple example. We will begin by converting our uppercase subroutine, Figure 2-4, into a complete assembly source file (Figure 2-10). This requires only the addition of a public statement to make the entry-point label of the subroutine available to other modules.

Two other, optional, lines have been added in Figure 2-10: a name statement causes the module's name to be part of its object file, and an end statement tells the assembler the source is complete. The assembler doesn't require the end line; the physical end of the file would serve. The name statement names the module within an object library.

name uppit ; module name

public uppit ; entry-label is public

uppit:

mov a,m

cpi 'a' ; lowercase?

rc ; (no, less than 'a')

cpi 'z'+1

rnc ; (no, >= 'z')

ani 0ffh-20h; yes, make upper

ret

end

Figure 2-10. The uppit subroutine as a complete module.

If it were placed in a file uppit.asm, the source text shown in Figure 2-10 could be assembled by rmac. The output would be another file, uppit.rel, which defines the object code of the subroutine. It isn't a complete program; it doesn't do anything that would be useful as a CP/M command. It is, however, a logically-separable part of a program, one that realizes the functional abstraction of converting a letter to uppercase.

Now we need a way to combine this module with another program. Suppose we were writing a program in which we had need of uppit. Here is part of it:

extrn uppit

lxi h,LetterByte

call uppit

The extern statement tells the assembler that the name uppit is not defined anywhere in the current source file. Instead we promise, cross our hearts and hope to die, that the name uppit will be defined as public in some other source file. The assembler believes us. When it assembles the call uppit statement, it doesn't try to put an address in the call instruction. It puts only zeros there, and inserts a note in its output saying "this call instruction won't work until it has been patched to point to something called uppit."

The program that weaves together these interlocking name-references is called link; it is part of the rmac software package. It processes the output of one or more assemblies to produce a runnable program. We could finish our program with the command

A>link program,uppit

The link program would read program.rel and uppit.rel and combine them. It would note the public name uppit in one and the external reference to the same name in the other, and it would patch the call instruction so that it pointed to the code of the uppit subroutine. Its output would be the file program.com, ready to execute.

Notice what this gives us. The subroutine uppit only needs to be written once; in it, we put our idea of how a certain abstract function is to be implemented. It needs to be assembled only once, also. After all, once we have defined how to do this task, how often will we need to change it? The tiny file uppit.rel is all we need. In any program where we need the function, we can write an extrn statement for uppit, and include uppit when we link the program. Even that can be simplified, as we'll see.

But we have left a loose end. We actually use uppit by way of the macro upany (Figure 2-9). It's the macro name that represents the function to us, not the subroutine name. It's all too likely that we'll employ the macro without remembering to declare the subroutine as external. If we do, the call statement in the macro expansion will produce an assembly error type "U" because the name uppit will be undefined.

This is easily fixed. All we have to do is to add the extrn statement to the macro body (Figure 2-11). When the macro expands, the statement will be processed. The assembler will note the need for uppit in the object file, the linker will add it to the finished program, and all will be well. If we use the macro several times, several extrn statements will be generated. That causes no problems with the Digital Research assembler and linker, although other products might prohibit it.

upany macro which ; parameter-name is "which"

if not nul which

push psw ; save A

if which ne +H

push h ; save HL

lxi h,which ; point at byte "which"

endif

EXTRN UPPIT

call uppit ; get as upcase

mov m,a ; put back

if which ne +H

pop h ; restore HL

endif

pop psw ; restore A

else ; "which" was null

+++ Address of byte is required

endif

endm ; close macro defn.

Figure 2-11. A macro that calls an external subroutine.

I have glossed over the linker's other task, relocation. Consider: at the time it is processing a source file, the assembler cannot possibly know where the object code is going to be located in storage. Nor can we know it. Oh, if the source file represents the main part of a CP/M command, we know that it will have to be located at 0100h, the base of the CP/M transient program area. But when we are assembling a subroutine like the one in Figure 2-10, neither we nor the assembler can tell where the code will finally reside. It depends on the size of the program to which the subroutine will be appended. By the same token, the assembler can't predict where an external name will be when the program is finished.

Many Z80 instructions, especially jumps and calls, contain operands that are 16-bit address values. These addresses depend on the final location of the code that they refer to. Yet the assembler cannot know where the code will be! How can it finish these instructions?

The answer, of course, is that it doesn't finish them. It leaves room in the object code for the addresses, but it doesn't try to put values in them. It does include notes in the object file that say, in effect, "this word refers to an offset of x bytes within this module." Everything else is up to the linker.

The linker is the program that knows the final location of each piece of code. It puts the first .rel file at address 0100h; all the rest follow it. As it prepares each module, link updates all the addresses so they refer to the location of their final targets.

The rmac product is composed of three programs. We've already discussed the assembler, rmac, and the linker, link. The third program is a library manager, lib.

Its purpose is to build and maintain libraries of object modules like uppit.rel. It is easy to accumulate a collection of dozens, even hundreds, of useful little modules. It is awkward and inefficient to keep each one as a separate .rel file and to name it in the link command. Instead we collect them in related groups as object libraries ("relocatable libraries" in the Digital Research documentation). The lib command is used to build an object library, to list its contents, or to replace a module within a library.

The lib program will build either of two types of library files. Both have the same contents, a collection of modules. The simplest is just a concatenation of the modules that go into it. Such a library has the filetype .rel.

The second type is called an "indexed relocatable library" and has the filetype .irl. A file of this type commences with a table of module-names and the file offsets at which they commence. Both the lib and the link program can use this tabular index to locate a module quickly.

Suppose that we already had an object library named misc.irl. We could add the file uppit.rel to the library with this command.

A>lib misc.irl[i]=misc.irl,uppit

The lib program's peculiar syntax becomes more understandable if you keep in mind that it always builds a new file from one or more old files. The new file is named on the left of the equals sign. The old files that go into it are named in a list to the right of the equals sign. The command above can be read as "Lib: create misc.irl (which is to be an indexed relocatable library) from the contents of misc.irl plus uppit.rel."

Notice that giving a filetype of irl to the new file is not sufficient. You have to include the option [i], or lib will build an ordinary library.

Should we alter uppit and reassemble it, we could update it in the library with this command.

A>lib misc.irl[i]=misc.irl<uppit>

Read that as "Lib: create misc.irl (which is to be an indexed relocatable library) from the contents of misc.irl, replacing the module whose name is uppit with the file named uppit.rel." (We put name statements in module source files, as in Figure 2-10, so we can refer to them in such commands.)

When we have uppit in an object library, we can link a program that uses it with this command.

A>link program,misc.irl[s]

The option [s] after the library name means "selective search"; it tells link to include only the modules from this library that are specifically required. Lacking the option, link will include everything in the library whether it is referenced or not.

The toolkit used in this book is kept in one object library, environ.irl.

We have achieved the acme of abstraction allowed by the Digital Research assembly products. We have defined an abstract function, that of making a byte uppercase. We implemented the simplest, most general case of the abstraction as assembly source, then stored its object code in an object library.

We defined a macro as an interface to the abstract function. The macro builds on the simple subroutine by saving, loading, and restoring registers. The macro also declares the subroutine as external so that link will include it.

When these steps have been done, all we need to do in order to use the abstract function is to include the macro library that defines it in our programs. Well, we do have to remember the name of the macro and what it wants in the way of parameters. However, all that's needed to invoke the function is to write of the macro name and its parameter. The macro will take care of setting up the registers that the subroutine needs. The assembler will note that the subroutine is needed. The linker will search for it and append it to the linked program.

In the next chapter we will experiment with these tools by writing some simple file-copy programs, and we will study some of the features of the file abstract data type

We have one more element of the programming craft to discuss. A craftsperson draws a plan before starting work. That step is crucially important in programming. Here we will write write a small program, using it as an example of planning and of using the toolkit macros.

For a first exercise, I propose a program that is very simple, a mere finger-exercise, yet one that is quite hard to do well in any but assembly language. Furthermore, I have seen programs like it advertised for sale for $25, so right here we are going to justify the cover price of this book.

The purpose of the program is to accept lines of input from the keyboard and duplicate them on the printer -- in other words, to turn your expensive CP/M system into a mere typewriter. There are good uses for such a program. You can use it to address an envelope, for instance, or to put a note on a listing.

It is possible to drive the printer from the keyboard using standard CP/M commands. The Control-P keyboard signal makes the BDOS echo each command and its output on the printer. That has its uses, but it won't let you address an envelope; at least, not unless you want each line of the address to commence with "A>."

You can drive the printer from the keyboard with pip by giving the command

A>pip lst:=con:

Unfortunately, pip reads keyboard input one byte at a time. When a program does so, none of the normal input editing keys work. If you make a typo, the typo is printed at once. If you backspace to correct the typo, the backspace is sent to the printer, followed by the overstruck correction. (Many printers ignore backspaces, so both letters appear, making things even worse.) Furthermore, pip echoes only the keys it receives. In particular, when you end a line with the Return key, only the Return code is sent. The Linefeed code that CP/M normally adds isn't sent. So you have to press both Return and Linefeed at the end of each line.

What we want, then, is a program that will read complete lines from the keyboard, permitting the full range of line editing that the BDOS supports. Support for line editing is especially important with CP/M Plus, which allows you to make corrections at any point in the line. It's still useful with CP/M 2.2, which at least permits you to backspace to an error. When the line is complete, the program is to copy it to the printer. This process is to be repeated until we are done.

The preceding paragraph is a plan of sorts, but it has to be formalized. In Figure 3-1 you will see a first pass at a formal plan for the typist program.

typist

Keyboard is a file that represents the console.

Printer is a file that represents the printer.

Line is a space for a string of characters.

repeat

read a line from Keyboard into Line

write Line to Printer

until done

end.

Figure 3-1. First-draft pseudo-code of typist.

That plan is rendered in pseudo-code, a notation for planning programs. A pseudo-code is any notation that mixes the few, rigid, control structures allowed by good programming practice with free-form words whenever we want to defer thinking about irrelevant details.

A pseudo-code plan is for use by people, not by programs, so it may use any notational conventions you like. My personal pseudo-code style mixes English with forms taken from real programming languages. You are free to develop your own pseudo-code style, one that suits you taste and the way you think. The style should force you to state the shape of the problem in the formal, explicit manner of a programming language while not impeding your thinking with excess detail.

There are four reasons to write a plan in pseudo-code. The first is that it forces discipline on a verbal specification, giving it structure. That structure reveals much more precisely what the program will entail. Often a problem that sounds simple in English turns out to be very difficult to do. It's better to find that out while planning than while coding.

The second reason is that we make discoveries as we labor to translate fuzzy words into the strict forms of a program. We discover which things in the program will be hard to do and which easy. We discover that down here we will need to use something that we could have stored back up there. We discover that we will have to do the same procedure at three different places, and therefore it should be a subroutine. Or we discover that we have to do in this program something that we already worked out while planning an earlier one.

The third reason is that we can experiment with a pseudo-code plan. It's close enough to a program that we can make initial judgements about the algorithm we will use. We can write two versions of a procedure and decide quite accurately which version will run faster or use more working storage. We can erase, cross out, revise in every way; and we can do it with much faster "turnaround time" than if we were doing the same experiments in a real programming language. The flexibility and power of the pseudo-code "editor" and "compiler" are really amazing when you consider that they consist of nothing more than a yellow pad and a felt-tip pen.

The last reason is that the actual program is much easier to write when we can work from a good pseudo-code plan. One reason assembly language is hard to use is the number of niggling details that we have to keep track of: the contents of the registers, the correct jump condition to use, and so on. But these matters are local problems that can be worked out while looking at a span of code no larger than one screenful. They aren't difficult to solve, provided we are not at the same time trying to solve global, algorithmic problems. It's just not possible to work out the correct use of the stack and the shape of the main loop at the same time. But if you have made all the big decisions and recorded them as pseudo-code, the coding details are easy to cope with.

Let's return to the design of the typist program. The first draft of a plan appears in Figure 3-1, and it is nearly enough to code from. (I can say that because I know there are toolkit macros that correspond to each of the major lines in Figure 3-1.) There's only one change that should be made in it. The loop condition, "until done," is vague. When is "done"? The answer requires a digression on the nature of the file abstract type.

One of my objectives in designing the file-handling tools was that all files were to be handled alike, whether they were disk files or CP/M character devices. As much as possible, an input file was to be an input file regardless of whether its contents came from a disk, or the keyboard, or a modem. And it worked out that that could be done (the details are in Part II).

Another principle was there were two types of files, ASCII and binary. An ASCII file contains ASCII characters grouped in lines, and its end is marked by the Control-Z byte or by physical end of file, whichever comes first. A binary file contains any sort of bytes; has no internal structure except as the program imposes one; and it ends only at physical end of file. The difference between these two types of file lies entirely in how the program treats them. If it reads a file with ASCII-type macros, the file will be given ASCII-type treatment; if not, not.

Now, it is my plan in typist to treat both the console and the printer as ASCII files. That means that the end of the input file will be marked by receipt of a Control-Z byte. (It can't be marked by physical end of file, because there is no such thing as the "end" of a device.) The functions that read ASCII data return a signal when they find a Control-Z byte.

That is what "until done" amounts to, then: the appearance of logical end of file on the input, as signaled by the function that reads a line. We can express this in the plan by rewriting it as shown in Figure 3-2. In it, the pseudo-keyword break means "exit the loop right now."

typist

Keyboard is a file that represents the console.

Printer is a file that represents the printer.

Line is a space for a string of characters.

loop

read a line from Keyboard into Line

if end-of-file, break

write Line to Printer

repeat

end.

Figure 3-1. Final draft pseudo-code plan of typist.

Since it doesn't do any processing of the data, the typist program consists almost entirely of calls on toolkit functions. As a result, its assembly code (Figure 3-3) is almost a line-for-line translation of the plan. Read it now, then let's examine it together.

; TYPIST -- echo keyboard lines to printer

maclib environ

dseg

linesize equ 128 ; max line we will read

keyboard:

confile

printer:

lstfile

line: strspace linesize

cseg

prolog

loop: fgetstr keyboard,line,linesize

rz

fputline printer,line

jmp loop

end

Figure 3-3. Source code of the typist program.

Many of the lines of the program are calls on toolkit macros. In a minute we will talk about these in the order they are used. Remember, however, that all of these macros are documented in the Appendix to this book.